What is Hadoop?

Hadoop is an open-source Apache framework, written in Java, that enables distributed processing of large datasets across clusters of computers using simple programming models. A Hadoop application runs in an environment that provides distributed storage and computation across those clusters. Hadoop is designed to scale from a single server to thousands of machines, each offering local computation and storage.

Hadoop Architecture

At its core, Hadoop has two major layers:

- Processing/Computation layer (MapReduce)

- Storage layer (Hadoop Distributed File System).

MapReduce

MapReduce is a parallel programming model for writing distributed applications. It was devised at Google to process large amounts of data (multi-terabyte datasets) efficiently on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner. MapReduce programs run on Hadoop, the open-source Apache framework described above.
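To make the model more concrete, here is a minimal sketch of the map, shuffle, and reduce phases written as an ordinary shell pipeline on a single machine. It is only an analogy, not Hadoop code: the function names (`map_words`, `shuffle`, `reduce_counts`, `wordcount`) are invented for this illustration, and a real Hadoop job would run many mappers and reducers in parallel across the cluster.

```shell
# Map: emit one "word<TAB>1" pair for every word in the input.
map_words() {
  tr -s '[:space:]' '\n' | awk 'NF { print $1 "\t" 1 }'
}

# Shuffle: sort the pairs so that identical keys end up next to each other.
shuffle() {
  LC_ALL=C sort
}

# Reduce: add up the counts of each run of identical keys.
reduce_counts() {
  awk -F '\t' '
    $1 != key { if (NR > 1) print key "\t" sum; key = $1; sum = 0 }
    { sum += $2 }
    END { if (NR > 0) print key "\t" sum }
  '
}

# The whole "job": map, then shuffle, then reduce.
wordcount() {
  map_words | shuffle | reduce_counts
}

# Small demonstration input.
printf 'b a b\na\n' | wordcount
```

The demonstration counts two occurrences each of `a` and `b`. The point of the split is that the map and reduce steps only ever look at one record or one key at a time, which is what lets a framework such as Hadoop spread them over many nodes.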

Hadoop Distributed File System

The Hadoop Distributed File System (HDFS) is based on the Google File System (GFS) and provides a distributed file system that is designed to run on commodity hardware. It has many similarities with existing distributed file systems. However, the differences from other distributed file systems are significant. It is highly fault-tolerant and is designed to be deployed on low-cost hardware. It provides high throughput access to application data and is suitable for applications having large datasets.

Apart from the two core components mentioned above, the Hadoop framework also includes the following two modules:

Hadoop Common:

These are Java libraries and utilities required by other Hadoop modules.

Hadoop YARN:

This is a framework for job scheduling and cluster resource management.

Installation Process

Creating a User

To begin, it is recommended to create a separate user for Hadoop, to isolate the Hadoop file system from the Unix file system.

$ sudo addgroup hadoop

[sudo] password for mp:

Adding group `hadoop' (GID 1001) ...

Done.

$ sudo adduser --ingroup hadoop hadoop

Adding user `hadoop' ...

Adding new user `hadoop' (1001) with group `hadoop' ...

Creating home directory `/home/hadoop' ...

Copying files from `/etc/skel' ...

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

Changing the user information for hadoop

Enter the new value, or press ENTER for the default

Full Name []:

Room Number []:

Work Phone []:

Home Phone []:

Other []:

Is the information correct? [Y/n] y

$ sudo adduser hadoop sudo

Adding user `hadoop' to group `sudo' ...

Adding user hadoop to group sudo

Done.

$ su hadoop

SSH Setup and Key Generation

SSH setup is required for cluster operations such as starting and stopping the distributed daemons and running remote shell commands. To authenticate Hadoop users, a public/private key pair must be provided for the Hadoop user and its public key shared with the other users.

Now let’s generate a key pair and add the public key to authorized_keys:

hadoop@ubuntu:~$ ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:NAjpt4EY3GyQeant1jUjdrrR5BmEw4j5/8Uod+xAxVI hadoop@ubuntu

The key's randomart image is:

+---[RSA 2048]----+

| ..O.= . oE |

| B O.+.o o |

| X ..ooo |

| o = =.O. |

| . = %SO |

| o B B = |

| . * = |

| . . . |

| |

+----[SHA256]-----+

hadoop@ubuntu:~$ cat /home/hadoop/.ssh/id_rsa.pub >> /home/hadoop/.ssh/authorized_keys

hadoop@ubuntu:~$ ssh localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ECDSA key fingerprint is SHA256:P74KhDSAe9CFQGN1IJjhv84n6NpPU+huKUol/7IAGDk.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

Welcome to Ubuntu 18.04.4 LTS (GNU/Linux 5.3.0-45-generic x86_64)

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

hadoop@ubuntu:~$
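If `ssh localhost` still asks for a password, overly open permissions on the key files are a common cause, because sshd can refuse keys that other users are able to write. The following is a small sketch of how the permissions could be tightened. `secure_ssh_dir` is a hypothetical helper name, not part of SSH or Hadoop.

```shell
# Hypothetical helper: restrict an SSH directory to its owner.
# Argument: the SSH directory (defaults to ~/.ssh of the current user).
secure_ssh_dir() {
  ssh_dir=${1:-"$HOME/.ssh"}
  # Directory: owner only. authorized_keys: owner read/write only.
  chmod 700 "$ssh_dir" &&
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage for the hadoop user created earlier:
#   secure_ssh_dir /home/hadoop/.ssh
#   ssh localhost
```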

This guide assumes you already have Java installed.

Just make sure you set JAVA_HOME in hadoop-env.sh.
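One way to do that is sketched below. `set_java_home` is a hypothetical helper, and the JDK path in the usage comment is an assumption; substitute the location of your own Java installation.

```shell
# Hypothetical helper: record JAVA_HOME in a hadoop-env.sh file.
# Arguments: path to hadoop-env.sh, path to the JDK installation.
set_java_home() {
  env_file=$1
  java_home=$2
  # Append only when no uncommented JAVA_HOME export exists yet,
  # so running the helper twice does not add a second line.
  if ! grep -q '^export JAVA_HOME=' "$env_file" 2>/dev/null; then
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Usage (the JDK path is an assumption, adjust it to your machine):
#   set_java_home /home/mp/Downloads/hadoop-3.2.1/etc/hadoop/hadoop-env.sh /usr/lib/jvm/java-8-openjdk-amd64
```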

Download Hadoop

You can download Hadoop from the Apache Hadoop website.
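If you prefer the command line, the download could look roughly like the sketch below. The helper names are invented for this example, and the URL pattern assumes the release is fetched from the Apache archive server; check the Apache Hadoop download page for the mirror and version you actually want.

```shell
# Build the archive URL for a given Hadoop release (assumed URL layout).
hadoop_tarball_url() {
  version=$1
  printf 'https://archive.apache.org/dist/hadoop/common/hadoop-%s/hadoop-%s.tar.gz\n' "$version" "$version"
}

# Download a release and unpack it into a target directory.
# Requires wget and tar to be installed.
fetch_hadoop() {
  version=$1
  target_dir=$2
  wget -P "$target_dir" "$(hadoop_tarball_url "$version")" &&
  tar -xzf "$target_dir/hadoop-$version.tar.gz" -C "$target_dir"
}

# Usage matching the version and folder used in this guide:
#   fetch_hadoop 3.2.1 /home/mp/Downloads
```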

Hadoop Operation Modes

Hadoop comes with three modes of operation:

1. Local/Standalone Mode: After you download Hadoop, it is configured in standalone mode by default and runs as a single Java process.

2. Pseudo-Distributed Mode: This is a distributed simulation on a single machine. Each Hadoop daemon, such as HDFS, YARN, and MapReduce, runs as a separate Java process. This mode is useful for development.

3. Fully Distributed Mode: This mode is fully distributed, with a cluster of at least two machines. We will cover this mode in detail in the coming chapters.

Note: This guide covers the pseudo-distributed installation, which is similar to the fully distributed one.

- Set up environment variables:

$ vi ~/.bashrc

export HADOOP_HOME=/home/mp/Downloads/hadoop-3.2.1 ## <<< This is the location of your hadoop folder.

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_INSTALL=$HADOOP_HOME

$ source ~/.bashrc
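Before moving on, it can help to confirm that the variables point at a real installation. The check below is a sketch; `check_hadoop_env` is a hypothetical function name, and it only tests that an executable `bin/hadoop` exists under `HADOOP_HOME`.

```shell
# Hypothetical sanity check for the variables exported in ~/.bashrc.
check_hadoop_env() {
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set"
    return 1
  fi
  if [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "no hadoop executable under $HADOOP_HOME/bin"
    return 1
  fi
  echo "HADOOP_HOME looks usable: $HADOOP_HOME"
}

# Usage: print the Hadoop version only if the check passes.
#   check_hadoop_env && hadoop version
```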

- Hadoop Configuration

$ cd $HADOOP_HOME/etc/hadoop # <--- all Hadoop configuration can be found here

#### Add this configuration

$ vi core-site.xml ### <----- This file contains details about ports and Hadoop instance and memory

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.16.131.138:9000</value>

</property>

</configuration>

$ vi hdfs-site.xml ###### <----- This file contains information regarding the replication factor, NameNode, and DataNode

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/hadoop/hadoop_mehdi/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/hadoop/hadoop_mehdi/hdfs/datanode</value>

</property>

</configuration>

Note: Please adapt these config files to your own infrastructure.

$ vi yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

$ vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- Verifying Hadoop Installation

First, set up the NameNode:

hadoop@ubuntu:~$ hdfs namenode -format

WARNING: /home/mp/Downloads/hadoop-3.2.1/logs does not exist. Creating.

2020-04-08 08:56:17,715 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = ubuntu/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.2.1

STARTUP_MSG: classpath = /home/mp/Downloads/hadoop-3.2.1/etc/hadoop:/home/mp/Downloads/hadoop-3.2.1/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:... [remaining classpath entries omitted]

STARTUP_MSG: build = https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842; compiled by 'rohithsharmaks' on 2019-09-10T15:56Z

STARTUP_MSG: java = 1.8.0_241

************************************************************/

2020-04-08 08:56:17,745 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2020-04-08 08:56:17,916 INFO namenode.NameNode: createNameNode [-format]

2020-04-08 08:56:18,139 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Formatting using clusterid: CID-3f1ae3d6-10c4-487d-8c62-e0cf678bf50b

2020-04-08 08:56:19,004 INFO namenode.FSEditLog: Edit logging is async:true

2020-04-08 08:56:19,047 INFO namenode.FSNamesystem: KeyProvider: null

2020-04-08 08:56:19,049 INFO namenode.FSNamesystem: fsLock is fair: true

2020-04-08 08:56:19,049 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2020-04-08 08:56:19,065 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

2020-04-08 08:56:19,065 INFO namenode.FSNamesystem: supergroup = supergroup

2020-04-08 08:56:19,065 INFO namenode.FSNamesystem: isPermissionEnabled = true

2020-04-08 08:56:19,065 INFO namenode.FSNamesystem: HA Enabled: false

2020-04-08 08:56:19,170 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2020-04-08 08:56:19,227 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2020-04-08 08:56:19,227 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2020-04-08 08:56:19,233 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2020-04-08 08:56:19,233 INFO blockmanagement.BlockManager: The block deletion will start around 2020 Apr 08 08:56:19

2020-04-08 08:56:19,235 INFO util.GSet: Computing capacity for map BlocksMap

2020-04-08 08:56:19,235 INFO util.GSet: VM type = 64-bit

2020-04-08 08:56:19,246 INFO util.GSet: 2.0% max memory 869.5 MB = 17.4 MB

2020-04-08 08:56:19,246 INFO util.GSet: capacity = 2^21 = 2097152 entries

2020-04-08 08:56:19,257 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled

2020-04-08 08:56:19,257 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2020-04-08 08:56:19,268 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2020-04-08 08:56:19,268 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2020-04-08 08:56:19,268 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2020-04-08 08:56:19,268 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: defaultReplication = 1

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: maxReplication = 512

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: minReplication = 1

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2020-04-08 08:56:19,270 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2020-04-08 08:56:19,342 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

2020-04-08 08:56:19,342 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

2020-04-08 08:56:19,342 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

2020-04-08 08:56:19,342 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

2020-04-08 08:56:19,356 INFO util.GSet: Computing capacity for map INodeMap

2020-04-08 08:56:19,356 INFO util.GSet: VM type = 64-bit

2020-04-08 08:56:19,357 INFO util.GSet: 1.0% max memory 869.5 MB = 8.7 MB

2020-04-08 08:56:19,357 INFO util.GSet: capacity = 2^20 = 1048576 entries

2020-04-08 08:56:19,357 INFO namenode.FSDirectory: ACLs enabled? false

2020-04-08 08:56:19,357 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2020-04-08 08:56:19,357 INFO namenode.FSDirectory: XAttrs enabled? true

2020-04-08 08:56:19,357 INFO namenode.NameNode: Caching file names occurring more than 10 times

2020-04-08 08:56:19,364 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2020-04-08 08:56:19,366 INFO snapshot.SnapshotManager: SkipList is disabled

2020-04-08 08:56:19,370 INFO util.GSet: Computing capacity for map cachedBlocks

2020-04-08 08:56:19,370 INFO util.GSet: VM type = 64-bit

2020-04-08 08:56:19,370 INFO util.GSet: 0.25% max memory 869.5 MB = 2.2 MB

2020-04-08 08:56:19,370 INFO util.GSet: capacity = 2^18 = 262144 entries

2020-04-08 08:56:19,384 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2020-04-08 08:56:19,384 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2020-04-08 08:56:19,384 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2020-04-08 08:56:19,389 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2020-04-08 08:56:19,389 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2020-04-08 08:56:19,397 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2020-04-08 08:56:19,397 INFO util.GSet: VM type = 64-bit

2020-04-08 08:56:19,397 INFO util.GSet: 0.029999999329447746% max memory 869.5 MB = 267.1 KB

2020-04-08 08:56:19,397 INFO util.GSet: capacity = 2^15 = 32768 entries

2020-04-08 08:56:19,444 INFO namenode.FSImage: Allocated new BlockPoolId: BP-623550155-127.0.1.1-1586361379433

2020-04-08 08:56:19,473 INFO common.Storage: Storage directory /home/hadoop/hadoop_mehdi/hdfs/namenode has been successfully formatted.

2020-04-08 08:56:19,543 INFO namenode.FSImageFormatProtobuf: Saving image file /home/hadoop/hadoop_mehdi/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2020-04-08 08:56:19,740 INFO namenode.FSImageFormatProtobuf: Image file /home/hadoop/hadoop_mehdi/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 398 bytes saved in 0 seconds .

2020-04-08 08:56:19,780 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2020-04-08 08:56:19,805 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2020-04-08 08:56:19,806 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at ubuntu/127.0.1.1

************************************************************/

Second, verify HDFS by starting its daemons:

hadoop@ubuntu:~/hadoop_mehdi$ start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [ubuntu]

2020-04-08 22:43:50,407 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

You can ignore the warning. Next, let’s verify the YARN script:

hadoop@ubuntu:~/hadoop_mehdi$ start-yarn.sh

Starting resourcemanager

Starting nodemanagers
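With both scripts started, the `jps` tool that ships with the JDK lists the running Java processes, which gives a quick way to see whether every daemon came up. The sketch below filters that listing; `missing_daemons` is a hypothetical helper, and the five daemon names are the ones a pseudo-distributed setup like this one is expected to run.

```shell
# Print the expected Hadoop daemons that are absent from a jps listing.
# Reads the listing ("<pid> <ClassName>" per line) from standard input.
missing_daemons() {
  listing=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare against the whole second column so that
    # "SecondaryNameNode" is not mistaken for "NameNode".
    if ! printf '%s\n' "$listing" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$daemon"
    fi
  done
}

# Usage on the machine where the daemons were started:
#   jps | missing_daemons    # prints nothing when all five are running
```

If a name is printed, the log files under the Hadoop `logs` directory are the usual place to look for the reason.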

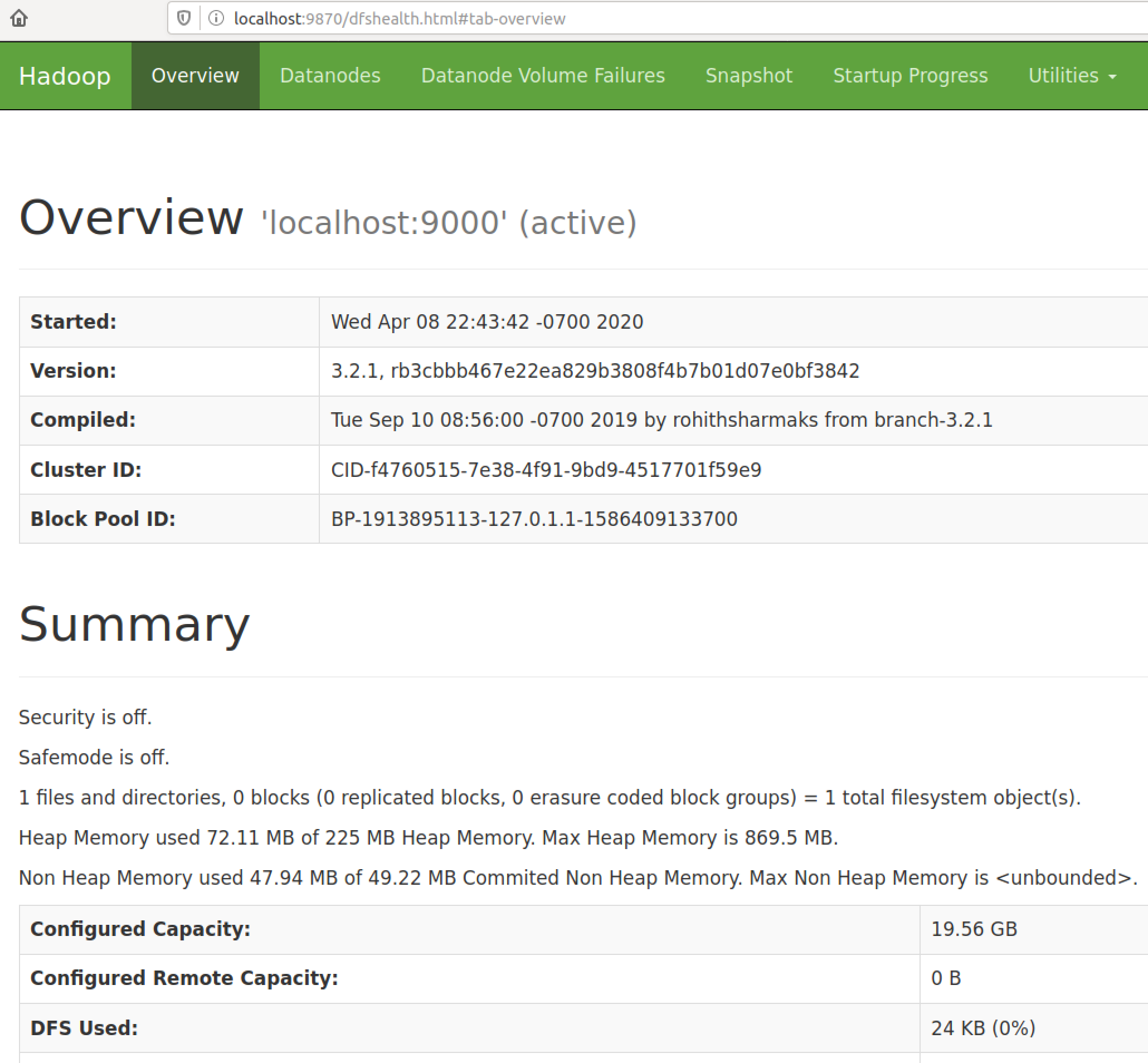

Accessing Hadoop on Browser

In Hadoop 2.x the default port for the NameNode web interface was 50070; in Hadoop 3.x, which is used here, the default is 9870. A production environment may be configured to use a different port.

http://localhost:9870

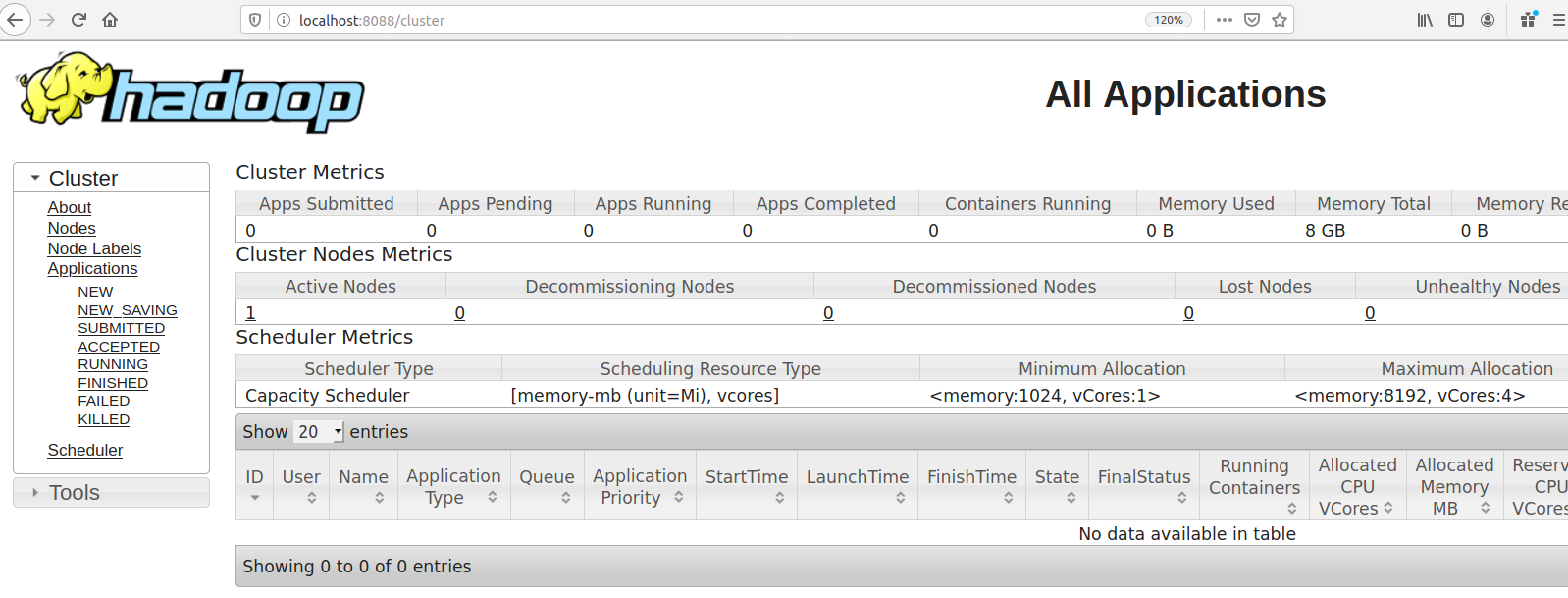

Verify All Applications for Cluster

The default port for viewing all applications of the cluster is 8088. Use the following URL to visit this service:

http://localhost:8088/

To access the NodeManager web interface, use port 8042:

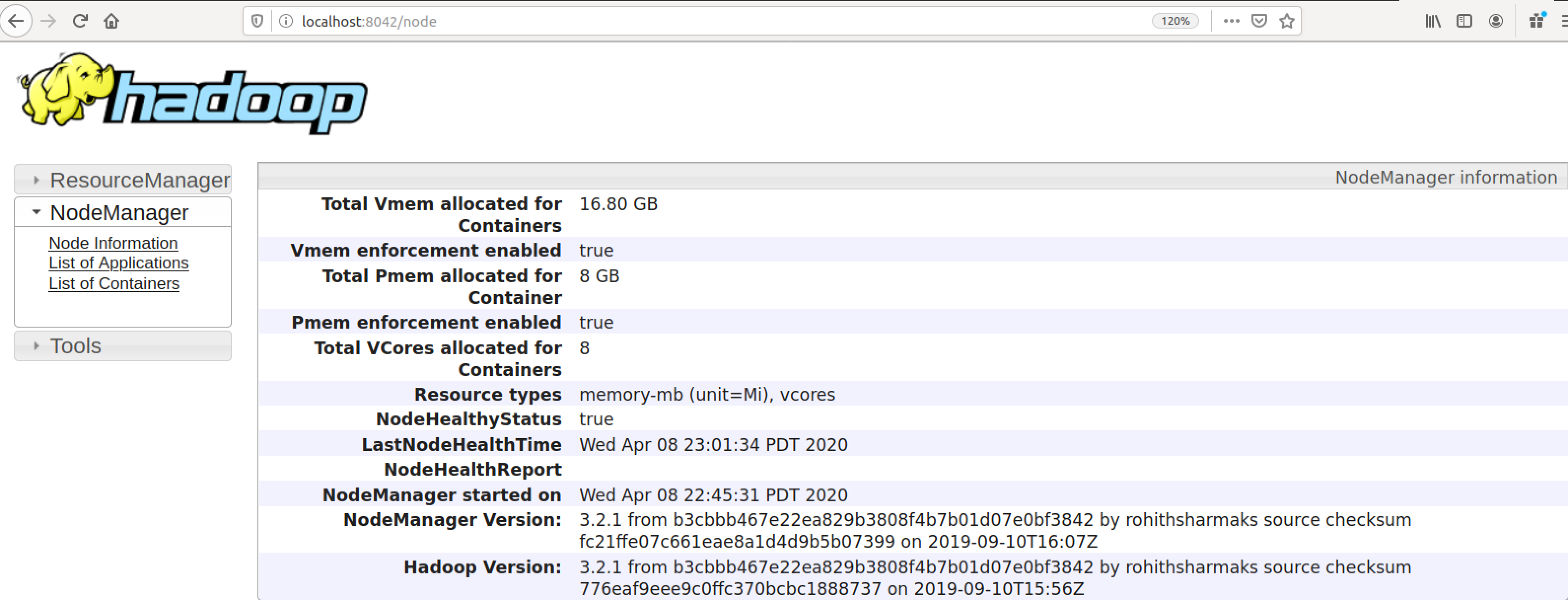

http://localhost:8042/
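The three ports listed above can also be probed from the terminal instead of a browser. This is a rough sketch that assumes `curl` is installed and that the Hadoop 3.x default ports are in use (9870 for the NameNode, 8088 for the ResourceManager, 8042 for the NodeManager); `check_web_uis` is a hypothetical helper name.

```shell
# Probe the web UIs and report which ones answer.
# Argument: host name (defaults to localhost).
check_web_uis() {
  host=${1:-localhost}
  for entry in "9870 NameNode" "8088 ResourceManager" "8042 NodeManager"; do
    port=${entry%% *}   # text before the first space
    name=${entry#* }    # text after the first space
    # -s: silent, -f: fail on HTTP errors, -o /dev/null: discard the page
    if curl -sf -o /dev/null "http://$host:$port/"; then
      echo "$name UI is up on port $port"
    else
      echo "$name UI is not reachable on port $port"
    fi
  done
}

# Usage:
#   check_web_uis            # probes localhost
#   check_web_uis 172.16.131.138
```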